docker

Resources

- Docker docs! The starting point

- Docker and Kubernetes complete tutorial, a very detailed playlist from beginner to advanced level in both Docker and Kubernetes - it's also a Udemy course, by the way

- Dev containers, a playlist from the VS Code YouTube channel about containerized dev environments

- Docker Mastery Udemy course on Docker and Kubernetes, by a Docker Captain

- 15 Quick Docker Tips

Concepts

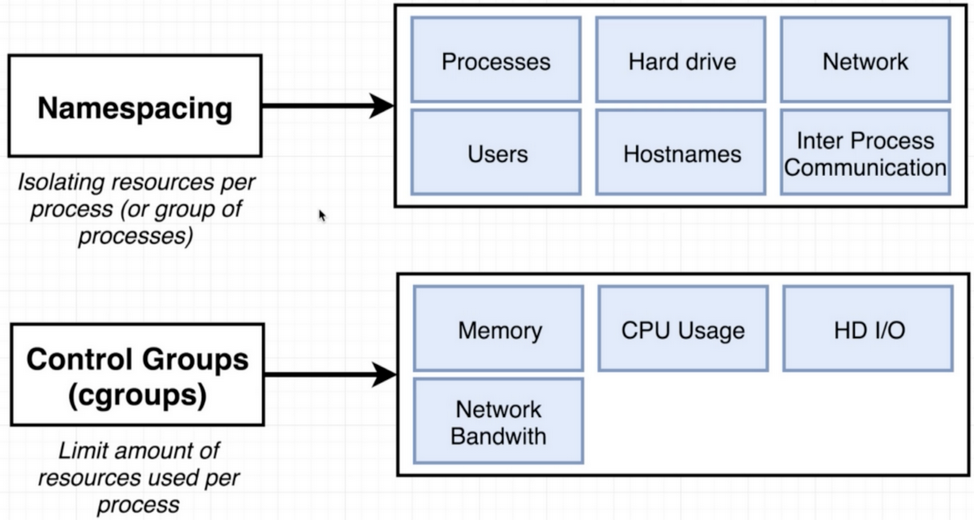

- Docker takes advantage of two Linux kernel features: namespaces, which isolate what a process or group of processes can see (process IDs, mount points and filesystem, network interfaces, hostname, users, etc.), and control groups (cgroups), which limit how much of the host's resources (RAM, CPU, disk I/O, number of processes, etc.) a process or group of processes can use

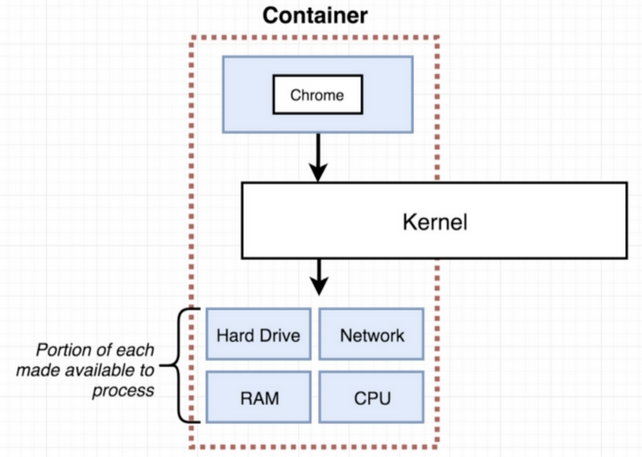

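- So a container is basically a regular process (or group of processes) running on the host's kernel: its namespaces give it an isolated view of the system (filesystem, network, process tree, etc.) and its cgroups cap the hardware resources (RAM, CPU, disk I/O, etc.) it can consume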

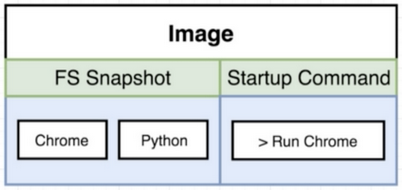

- An image is essentially a read-only filesystem snapshot with a startup command

- Docker's architecture:

Install docker on Debian

(Reference here)

- Compare your Debian version (in `/etc/issue`) with the current installation requirements
- Set up the stable Docker repository with:

      sudo apt-get update
      sudo apt-get install ca-certificates curl gnupg lsb-release
      curl -fsSL https://download.docker.com/linux/debian/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
      echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/debian $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

- Install the Docker engine:

      sudo apt-get update
      sudo apt-get install docker-ce docker-ce-cli containerd.io

- Test the installation with `docker --version` (this only checks the CLI; `sudo docker run hello-world` also checks that the daemon can pull and run an image)
- Create a new user group called 'docker' (the package may have already created it) and add your user to it:

      sudo groupadd docker
      sudo usermod -aG docker $USER
      newgrp docker

  Test the last commands by running `docker` without having to preface `sudo` (`newgrp` only affects the current shell: log out and back in to apply the change everywhere).
- Configure Docker to start on boot:

      sudo systemctl enable docker.service
      sudo systemctl enable containerd.service

- The Docker daemon configuration is stored in `/etc/docker/daemon.json`, while `~/.docker/config.json` holds the per-user configuration of the Docker CLI client

Administration

Images and containers

docker image ls: all the images in the docker host's cache

Images are referred to with `USER/REPOSITORY:TAG`. "Official" images live at the 'root namespace' of the registry (on Docker Hub that is actually the `library` namespace), therefore you can call them by the repository name without specifying the `USER` (e.g. `nginx:latest`); if the `TAG` is omitted, Docker uses `latest`. Tags are basically labels pointing to a specific image commit in its repository, similarly to Git. Multiple tags can refer to the same image ID.
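The naming rules above can be sketched as a small parser. This is a simplified model, assuming Docker Hub only: it ignores registry hosts, digests (`@sha256:...`) and multi-level paths, and the function name `parse_image_ref` is hypothetical.

```python
from typing import Tuple


def parse_image_ref(ref: str) -> Tuple[str, str, str]:
    """Split a simplified Docker Hub reference [USER/]REPOSITORY[:TAG].

    Returns (user, repository, tag). Registry hosts and digests are not handled.
    """
    # A ':' only separates the tag if it comes after the last '/'
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:
        name, tag = ref[:colon], ref[colon + 1:]
    else:
        name, tag = ref, "latest"  # Docker's default tag
    if "/" in name:
        user, repository = name.split("/", 1)
    else:
        user, repository = "library", name  # official images on Docker Hub
    return user, repository, tag


if __name__ == "__main__":
    for ref in ("nginx", "postgres:14-alpine", "bitnami/redis:7.0"):
        print(ref, "->", parse_image_ref(ref))
```

So `nginx` expands to user `library`, repository `nginx`, tag `latest`, which is why official images can be pulled by repository name alone.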

- `docker image history nginx:latest`: list all the layers in the `nginx:latest` image (sorted by creation date, newest first)
- `docker container ls --all`: all the containers with all the statuses (running, created, exited, paused, etc.)
- `docker container run --detach --name postgres14 --publish 5432:5432 --env POSTGRES_USER=root --env POSTGRES_PASSWORD=secretpassword postgres:14-alpine`: runs a new detached instance of the `postgres:14-alpine` image, publishing the container's port `5432` (syntax is `--publish HOST:CONTAINER`) and using the specified environment variables
- `docker container run --interactive --tty --name ubuntu ubuntu bash`: overrides the startup command included with the `ubuntu:latest` image with the `bash` command, thus opening an interactive pseudo-TTY shell
- `docker image prune`: deletes all dangling images (check them before deleting with `docker images --filter "dangling=true"`); with `--all` it deletes every image not used by at least one container
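The direction of `--publish HOST:CONTAINER` is easy to mix up, so here is a hypothetical `parse_publish` helper sketching how such a value breaks down. It assumes the common forms only: no port ranges, no IPv6 host addresses, and no container-port-only form like `-p 80`. The `0.0.0.0` default stands for "all host interfaces" (Docker may bind the IPv6 `::` address as well).

```python
from typing import Dict, Union


def parse_publish(spec: str) -> Dict[str, Union[str, int]]:
    """Parse a simplified --publish value: [HOST_IP:]HOST_PORT:CONTAINER_PORT[/PROTOCOL]."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 3:
        host_ip, host_port, container_port = parts
    elif len(parts) == 2:
        # Without an explicit IP, Docker listens on all host interfaces
        host_ip = "0.0.0.0"
        host_port, container_port = parts
    else:
        raise ValueError(f"unsupported --publish value: {spec!r}")
    return {
        "host_ip": host_ip,
        "host_port": int(host_port),            # left side: port on the Docker host
        "container_port": int(container_port),  # right side: port inside the container
        "protocol": protocol or "tcp",
    }


if __name__ == "__main__":
    print(parse_publish("5432:5432"))
    print(parse_publish("127.0.0.1:8080:80/udp"))
```

Binding to `127.0.0.1` as in the second call keeps the published port reachable only from the Docker host itself.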

Info

How to pull the image of a specific distro (e.g. Alpine) without specifying the tag version? (to be tested): get all the tags of a specific image in a list (you will need the JSON processor jq, just use `apt-get install jq`) and filter them by distro with grep:

    wget -q https://registry.hub.docker.com/v1/repositories/postgres/tags -O - | jq -r '.[].name' | grep '\-alpine'

Replace `postgres` with your image name. Note that Docker Hub has deprecated its v1 API, so this endpoint may no longer respond.
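A Python take on the same tag filter, as a sketch only. It assumes the Docker Hub v2 endpoint `https://hub.docker.com/v2/repositories/library/<repo>/tags` and a response shaped like `{"results": [{"name": ...}, ...]}`; only the first page is fetched (pagination is ignored), and `fetch_tags_page` / `filter_tags` are hypothetical names.

```python
import json
import urllib.request
from typing import List

# Assumed endpoint: Docker Hub's v2 API for official ("library") images
TAGS_URL = "https://hub.docker.com/v2/repositories/library/{repo}/tags?page_size=100"


def fetch_tags_page(repo: str) -> str:
    """Download the first page of tags for an official image (no pagination)."""
    with urllib.request.urlopen(TAGS_URL.format(repo=repo), timeout=10) as resp:
        return resp.read().decode("utf-8")


def filter_tags(payload: str, distro: str = "alpine") -> List[str]:
    """Keep the tag names containing '-<distro>' (the same filter as the grep command)."""
    data = json.loads(payload)
    needle = f"-{distro}"
    return [tag["name"] for tag in data.get("results", []) if needle in tag["name"]]


if __name__ == "__main__":
    print(filter_tags(fetch_tags_page("postgres")))
```

Keeping the parsing in `filter_tags` separate from the download makes it easy to check against a saved JSON response.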

- `docker container cp FILE CONTAINER_NAME:/`: it copies `FILE` into the root folder of the `CONTAINER_NAME` container
- `docker container stop CONTAINER_NAME_OR_ID`: it sends a `SIGTERM` signal to the primary process inside the container, letting it shut down in its own time and with its own clean-up procedure
- `docker container kill CONTAINER_NAME_OR_ID`: it sends a `SIGKILL` signal to the primary process inside the container, shutting it down immediately; the Docker daemon also sends it automatically if the container's process has not exited within 10 seconds (the default grace period) of a `docker stop` command
- `docker system prune`: it removes all stopped containers, all networks not used by at least one container, all dangling images and the unused build cache (volumes are kept unless you add `--volumes`)
- Get the Docker image ID by its name (`IMAGE-NAME`):

      docker images --format="{{.Repository}} {{.ID}}" |  # Reformat the output of 'docker images'
      grep "IMAGE-NAME" |                                 # Find your image
      cut -d' ' -f2                                       # Cut the output and pick the ID

  or simply `docker images --quiet IMAGE-NAME`
- `docker system df` gives you stats about the occupied space, like:

      TYPE            TOTAL     ACTIVE    SIZE      RECLAIMABLE
      Images          8         0         1.665GB   1.665GB (100%)
      Containers      0         0         0B        0B
      Local Volumes   6         0         205.1MB   205.1MB (100%)
      Build Cache     0         0         0B        0B

- Remove all `Exited` containers at once, which is handy when there are too many of them to delete one by one with `docker container rm`:

      docker rm $(docker ps -a -f status=exited -q)
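To benefit from `docker stop`, the container's main process has to handle `SIGTERM`. Below is a minimal Python sketch of that pattern (the names `GracefulShutdown` and `main_loop` are hypothetical). It matters because the main process runs as PID 1 inside the container, and the Linux kernel does not apply the default "terminate" action to PID 1 for signals it has not registered a handler for: without a handler, `docker stop` just waits for the grace period and then falls back to `SIGKILL`. It also assumes the process is started with the exec (JSON array) form of `CMD`/`ENTRYPOINT`, so that it really is PID 1 rather than a child of `/bin/sh -c`.

```python
import signal
import time


class GracefulShutdown:
    """Remembers whether SIGTERM (what `docker stop` sends first) has arrived."""

    def __init__(self) -> None:
        self.requested = False
        signal.signal(signal.SIGTERM, self._handle)  # must run in the main thread

    def _handle(self, signum, frame) -> None:
        self.requested = True


def main_loop(tick: float = 0.05) -> str:
    shutdown = GracefulShutdown()
    while not shutdown.requested:
        time.sleep(tick)  # one unit of real work would go here
    # Clean-up (close DB connections, flush files) must finish before the
    # grace period (10 s by default) expires, or Docker sends SIGKILL.
    return "clean shutdown"


if __name__ == "__main__":
    print(main_loop())
```

The handler only sets a flag; the actual clean-up runs in the main loop, which keeps the signal handler short and safe.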

Network drivers and aliases

Reference here.

docker network ls: all the networks in docker

Remember that the default `bridge` network does not support the embedded DNS server (i.e. containers can't reach each other by name), which you can find in any new bridge network created with `docker network create --driver bridge NETWORK`. So, it's a best practice to always create your custom networks and attach your containers to them (with `docker network connect NETWORK CONTAINER`, or with `--network NETWORK` when running the container).

- `docker network inspect --format "{{json .Containers }}" bridge | jq`: lists all the containers connected to the default Docker network `bridge`
- `docker network inspect --format "{{json .IPAM.Config }}" bridge | jq`: gives the subnet (IP range) and gateway used by the default Docker network `bridge`
- `docker container inspect --format "{{json .NetworkSettings.IPAddress }}" nginx | jq`: the `nginx` container's internal IP address on the default `bridge` network, read from the `inspect` output; for user-defined networks look under `.NetworkSettings.Networks` instead (but use the container's hostname instead of its IP address... containers really are ephemeral!)
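For containers attached to one or more networks, a small Python sketch can read every network's IP from the full `docker container inspect` output. It assumes the JSON layout of recent Docker versions (an array with `NetworkSettings.Networks.<name>.IPAddress`) and a running container named `nginx`; `container_ips` is a hypothetical helper.

```python
import json
from typing import Dict


def container_ips(inspect_output: str) -> Dict[str, str]:
    """Map network name -> IP address from `docker container inspect CONTAINER` output."""
    containers = json.loads(inspect_output)  # inspect prints a JSON array
    networks = containers[0]["NetworkSettings"]["Networks"]
    return {name: settings["IPAddress"] for name, settings in networks.items()}


if __name__ == "__main__":
    import subprocess

    out = subprocess.run(
        ["docker", "container", "inspect", "nginx"],
        capture_output=True, text=True, check=True,
    ).stdout
    print(container_ips(out))
```

The same data is available from the CLI with `docker container inspect --format "{{json .NetworkSettings.Networks }}" nginx | jq`.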

- `docker network connect --alias ALIAS NETWORK CONTAINER`: sets `ALIAS` as an additional DNS name for the container in that network.

Multiple containers can even share the same alias. That's used for DNS round-robin, which is handy to test a simple form of DNS-based load balancing.
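To see the round-robin from a client's point of view, a minimal Python sketch can list every address returned for a name. Assumption: it is run inside a container attached to the same user-defined network, where `resolve_all("ALIAS")` should then return the IPs of all containers sharing the alias; `resolve_all` is a hypothetical helper built on the standard `socket.getaddrinfo`.

```python
import socket
from typing import List


def resolve_all(name: str, port: int = 80) -> List[str]:
    """Return every IPv4 address the resolver gives for `name`, deduplicated and sorted."""
    infos = socket.getaddrinfo(name, port, family=socket.AF_INET, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, (address, port))
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    import sys

    print(resolve_all(sys.argv[1] if len(sys.argv) > 1 else "localhost"))
```

Sorting makes the set of backends easy to compare, but it also hides the order the DNS server returned, which is exactly what varies between lookups in round-robin; drop the `sorted` call to observe that order.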

Using a Makefile to speed up the docker commands

To avoid typing long bash commands, automate the most common ones with a Makefile (there's also a tutorial at Makefiletutorial). The Makefile follows the syntax:

    target: prerequisites
    	command
    	command
    	command

    # Variables
    containername = YOUR-CONTAINER-NAME
    dbname = YOUR-DB-NAME
    dbuser = YOUR-DB-USER
    dbpassword = YOUR-DB-PASSWORD

    runbash: # It opens a bash shell on the target container
    	sudo docker exec -it $(containername) bash

    runpostgres: # It runs a PostgreSQL container
    	sudo docker run -d --name $(containername) -p 54325:5432 -e POSTGRES_DB=root -e POSTGRES_USER=$(dbuser) -e POSTGRES_PASSWORD=$(dbpassword) postgres:14-alpine

    createdb: # It creates the PostgreSQL database in the container
    	sudo docker exec -it $(containername) createdb --username=$(dbuser) --owner=$(dbuser) $(dbname)

    dropdb: # It drops the PostgreSQL database in the container
    	sudo docker exec -it $(containername) dropdb --username=$(dbuser) $(dbname)

    migrateup: # It performs a forward db migration
    	migrate -path db/migrations -database "postgresql://$(dbuser):$(dbpassword)@localhost:54325/$(dbname)?sslmode=disable" -verbose up

    migratedown: # It performs a backward db migration
    	migrate -path db/migrations -database "postgresql://$(dbuser):$(dbpassword)@localhost:54325/$(dbname)?sslmode=disable" -verbose down

    runpsql: # It opens a psql shell on the target container
    	sudo docker exec -it $(containername) psql --username=$(dbuser) $(dbname)

    .PHONY: runbash runpostgres createdb dropdb runpsql migrateup migratedown

Then just use make! 🎉🎊 For example:

    make runbash      # It will open a bash shell on the specified container
    make runpostgres  # Create the container and start it
    make createdb     # Create the database
    make migrateup    # Run the first migration to create the schema
    make runpsql      # Start the psql CLI

Recipe lines must be indented with a tab, not spaces: run `cat -etv Makefile` to look for missing tabs (a tab is shown as `^I`).
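As a scripted alternative to eyeballing the `cat -etv` output, here is a hypothetical Python checker that flags recipe lines starting with spaces. It's a rough heuristic, assuming a simple Makefile like the one above: it doesn't understand conditionals, `define` blocks or line continuations.

```python
import re
from typing import List

# A rule header such as "runbash: # comment" (but not "x := y" or "x = y")
RULE = re.compile(r"^[^\s#=:][^#=:]*:(?!=)")


def space_indented_recipes(makefile_text: str) -> List[int]:
    """Return the 1-based numbers of recipe lines indented with spaces instead of a tab."""
    bad = []
    in_rule = False
    for lineno, line in enumerate(makefile_text.splitlines(), start=1):
        if not line.strip():
            continue  # blank lines don't end a rule in this simplified check
        if line[0] in " \t":
            if in_rule and line.startswith(" "):
                bad.append(lineno)
            continue
        # Any unindented line either starts a new rule or ends the current one
        in_rule = bool(RULE.match(line))
    return bad


if __name__ == "__main__":
    with open("Makefile", encoding="utf-8") as f:
        print(space_indented_recipes(f.read()))
```

An empty list means every recipe line under a rule starts with a tab.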

Troubleshooting

Experiment on a running container:

`docker run -d IMAGE_NAME ping google.com`, where the `ping google.com` command overrides the image's default startup command and keeps the container running (as long as `ping` runs, so the image must include it)

Warning

The error `docker: Error response from daemon: driver failed programming external connectivity on endpoint ...: Error starting userland proxy: listen tcp4 0.0.0.0:5432: bind: address already in use` means that the specified host port (`5432` on `0.0.0.0` in the example) is already used by another process. Just change the host port in `--publish HOST:CONTAINER`, or find the process holding it with `sudo ss -ltnp | grep 5432`.

- `docker container exec --interactive --tty postgres14 psql -U root`: it starts a command (the one specified after the container's name, here `psql -U root`) running in addition to the container's startup command
- `docker container logs CONTAINER_NAME_OR_ID`: it shows the logs of the specified `CONTAINER_NAME_OR_ID`. This is the same output you would see when running the container without the `--detach` flag (add `--follow` to keep streaming new lines).

Developing

An example Dockerfile for a Python application (references here) with multiple instructions ('stanzas').

Here you can find some best practices for writing Dockerfiles.

    # syntax=docker/dockerfile:1

    # Base image
    FROM python:3.8-slim-buster

    # Container's default location for all subsequent commands
    WORKDIR /app

    # Copy a file from the build context (the Dockerfile's local folder) to a path relative to WORKDIR
    COPY requirements.txt requirements.txt

    # Run a command with the image's default shell (it can be changed with a SHELL instruction)
    RUN pip3 install -r requirements.txt

    # Copy the whole source code
    COPY . .

    # Command we want to run when our image is executed inside a container
    # Notice the "0.0.0.0", meant to make the application reachable from outside of the container
    CMD [ "python3", "-m" , "flask", "run", "--host=0.0.0.0"]
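The `--host=0.0.0.0` detail is easier to see in code. Here is a stand-in for the Flask app using only Python's standard `http.server` module (an assumption: the real app in the example uses Flask, and `APP_HOST` is a hypothetical environment variable). Port 5000 mirrors Flask's default port.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        body = b"Hello from the container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int = 5000) -> HTTPServer:
    # 0.0.0.0 listens on every interface of the container, including the one that
    # published ports are forwarded to; 127.0.0.1 would only accept connections
    # coming from inside the container itself.
    host = os.environ.get("APP_HOST", "0.0.0.0")  # APP_HOST is a hypothetical override
    return HTTPServer((host, port), HelloHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```

Run with `--publish 5000:5000`, the response should be reachable from the host at `http://localhost:5000/`; setting `APP_HOST=127.0.0.1` would make the same request fail from outside the container.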

- `CMD` arguments can be overridden by the arguments of `docker run`:

      $ cat Dockerfile
      FROM ubuntu
      CMD ["echo"]
      $ docker run imagename echo hello
      hello

  `ENTRYPOINT` arguments can NOT be overridden that way: the `docker run` arguments are appended to them instead (use `docker run --entrypoint` to replace the entrypoint explicitly):

      $ cat Dockerfile
      FROM ubuntu
      ENTRYPOINT ["echo"]
      $ docker run imagename echo hello
      echo hello

- To install an unpacked Windows service using its executable on Docker, use the following Dockerfile:

      FROM mcr.microsoft.com/windows/servercore:ltsc2019
      WORKDIR /app
      COPY . "C:/app"
      RUN ["C:/Windows/Microsoft.NET/Framework/v4.0.30319/InstallUtil.exe", "/i", "EXECUTABLE_NAME.exe"]
      SHELL ["powershell", "-Command", "$ErrorActionPreference = 'Stop'; $ProgressPreference = 'SilentlyContinue';"]
      CMD c:\app\Wait-Service.ps1 -ServiceName 'SERVICE_NAME' -AllowServiceRestart

  where the `Wait-Service.ps1` script is here.
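The `CMD` vs `ENTRYPOINT` behaviour can be modelled in a few lines of Python. This is a sketch of the rules for the exec (JSON array) form only: shell-form instructions (wrapped in `/bin/sh -c`) behave differently and are not modelled, and `effective_command` is a hypothetical name.

```python
from typing import List, Optional


def effective_command(
    entrypoint: Optional[List[str]],
    cmd: Optional[List[str]],
    run_args: List[str],
    entrypoint_override: Optional[List[str]] = None,
) -> List[str]:
    """Model how exec-form ENTRYPOINT/CMD combine with `docker run IMAGE ARGS...`."""
    if entrypoint_override is not None:
        # `docker run --entrypoint ...` replaces ENTRYPOINT and also drops the image's CMD
        return entrypoint_override + run_args
    # Arguments after the image name replace CMD entirely...
    args = run_args if run_args else (cmd or [])
    # ...and are appended to ENTRYPOINT when one is defined
    return (entrypoint or []) + args


if __name__ == "__main__":
    print(effective_command(None, ["echo"], ["echo", "hello"]))  # CMD replaced
    print(effective_command(["echo"], None, ["echo", "hello"]))  # appended to ENTRYPOINT
```

The two calls reproduce the examples: the first runs `echo hello` (printing `hello`), the second runs `echo echo hello` (printing `echo hello`). A common pattern that follows from these rules is to put the executable in `ENTRYPOINT` and its default arguments in `CMD`, so that `docker run IMAGE ...` only swaps the arguments.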